AI is everywhere in 2026. Tools like ChatGPT, Perplexity, and Gemini are changing how the digital world works.

Things we once thought only humans could do, like being creative and writing thoughtfully, are now being done by AI language models too.

Today, AI writes poems and blogs, creates images and videos, and even builds entire websites using code. This shift has clearly changed the market. It has also sparked mixed reactions.

Some people depend on AI tools for quick and detailed answers, while others remain cautious and unsure about how much they should trust these tools.

What is the Impact of AI Tools in Enterprises in 2026?

Many enterprises now restrict or closely control the use of AI language models because they worry about privacy leaks, data breaches, and copyright issues. Human-written, organic content marketing remains the most helpful tool for improving your credibility and building long-term customer trust.

GPT-style models learn from huge amounts of text, so they can sometimes repeat or blend in copyrighted material without naming the source. OpenAI itself has already faced several lawsuits claiming that it used copyrighted content without proper permission.

When teams use AI to write blogs or articles, the output is often very generic and adds little real value, so search engines may treat it as thin content. On top of that, AI tools can introduce serious factual and numerical errors, a problem known as AI hallucination.

According to a 2025 report from AllAboutAI, AI hallucinations have caused businesses an estimated USD 67.4 billion in losses. Nearly 47% of executives admitted that they had made major financial decisions based on AI-generated content that later proved inaccurate.

These risks make many enterprises cautious, which is why they now put more effort into telling human-written and AI-generated content apart and into building strong review and approval processes around AI.

At Das Writing Services, we strictly prohibit our writers from using AI tools to write content. We always make sure you get quality human-written content that ranks higher on Google. Contact our team now and get a free sample!

How to Detect AI Content?

Since AI responses closely resemble human writing, it is very difficult to tell human-written and AI-generated text apart reliably. Detector tools do not settle the question either: most of them incorrectly flag human writing as AI somewhere between 5% and 30% of the time.

According to a study by the Langedutech journal, a person can use two major techniques to differentiate AI and human-generated text. They are –

1. Stylometric Analysis

2. Metadata Analysis

First, check the writing style, which is called stylometry. Look at word choices, sentence structure, and grammar patterns. Humans tend to vary their style more, while AI sticks to predictable patterns.

Second, dig into metadata. Track where the text came from, including IP addresses, timestamps, and editing history. This can reveal whether it originated from an AI tool or a human.

But both of these methods require professional skills and advanced technological expertise. Even then, the results are not 100% reliable.
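To make the idea of stylometry a little more concrete, here is a minimal Python sketch that measures a few simple style signals: average sentence length, how much sentence length varies, and how varied the vocabulary is. The function name `stylometric_features` and the choice of features are our own assumptions for illustration. This is not the method used in the cited study or by any particular detector tool, and these numbers alone cannot prove who wrote a text.

```python
import re
from statistics import mean, pstdev


def stylometric_features(text: str) -> dict:
    """Compute a few simple, illustrative style signals for a text."""
    # Rough sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    # Lowercased word tokens (letters and apostrophes only).
    words = re.findall(r"[a-z']+", text.lower())
    # Number of words in each sentence.
    lengths = [len(re.findall(r"[A-Za-z']+", s)) for s in sentences]
    return {
        "sentence_count": len(sentences),
        "avg_sentence_length": mean(lengths) if lengths else 0.0,
        # A spread of 0 means every sentence has the same length.
        "sentence_length_spread": pstdev(lengths) if lengths else 0.0,
        # Share of distinct words; lower values mean more repetition.
        "type_token_ratio": len(set(words)) / len(words) if words else 0.0,
    }


sample = "The rate is fixed. The rate is low. The rate is good."
print(stylometric_features(sample))
```

In this sample every sentence has four words and half of the words are repeats, which is the kind of uniform pattern a stylometric check would notice. A human analyst would still need to compare such figures against known writing samples before drawing any conclusion.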

AI Content Detector Tools: Fact vs. Myth

The third option is to use AI-based GPT content detector tools, which is quite ironic: we are using an AI tool to identify whether a text was generated by another AI! The Internet is flooded with AI checker tools that claim to distinguish human-written texts from AI texts.

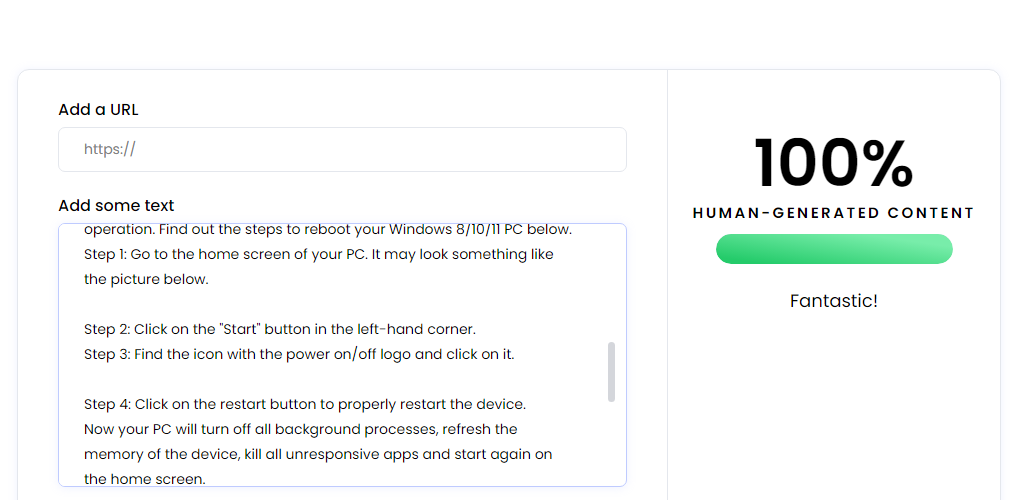

So, we decided to put a few of those tools to the test. We fed one of our blogs into two AI content detector tools, and here are the results.

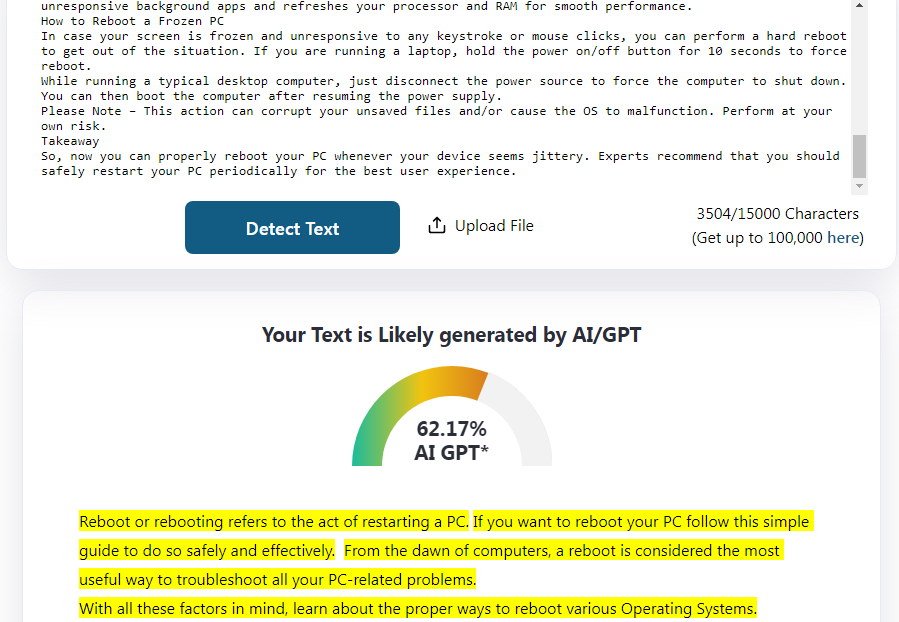

The first tool rightly identified the content as human-written. However, when we used another tool, it showed the opposite result.

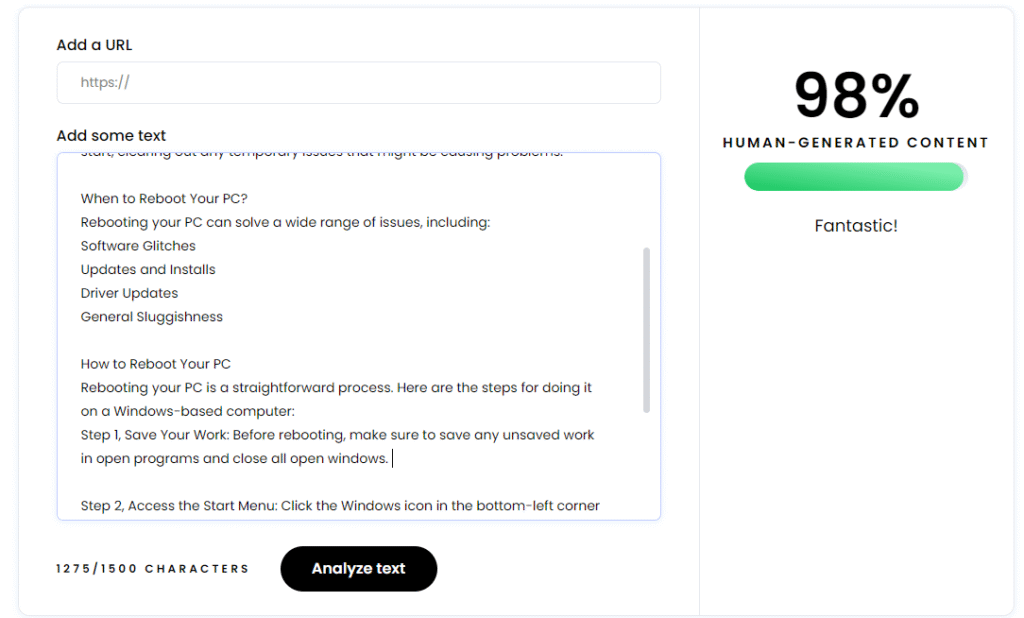

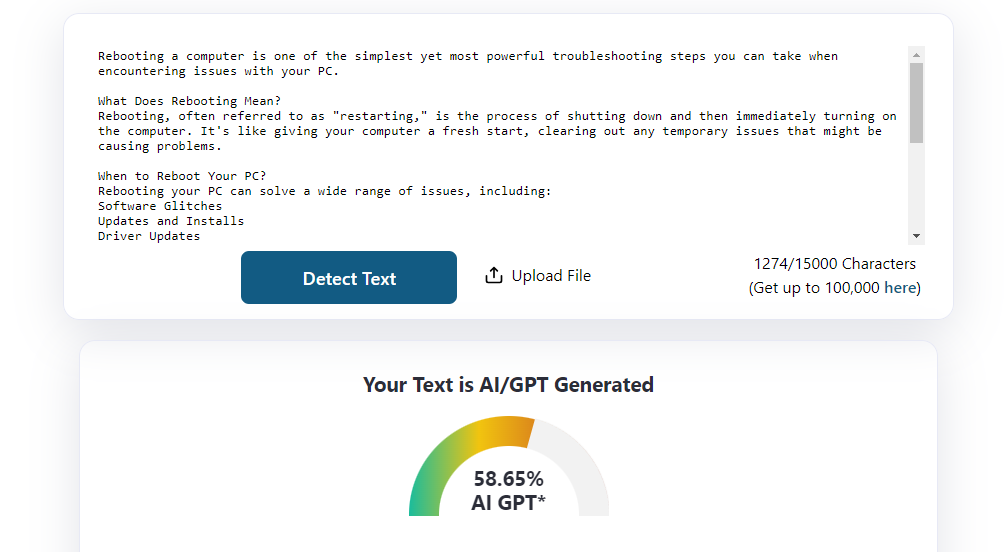

The same text is now being flagged as AI-generated. Next, we had ChatGPT 3.5 write a similar blog on the same topic. This time, we removed a few redundant phrases and pasted the article into the same two tools.

Here are the results:

The first tool identified ChatGPT 3.5’s content as human-written, while the second tool flagged it as AI-generated. Looking at the percentages, the second detector was more certain that our human-written blog was AI-generated (62 per cent). Ironically, it was less certain about the ChatGPT-produced article and gave it only 58 per cent.

It is this questionable accuracy of AI detector tools that led OpenAI, the company behind ChatGPT, to withdraw its own AI content detection tool.

We have also created a blog on the future of ChatGPT in content writing, so if you want more on that, you can click here.

Analysing the Results

First of all, as we pointed out above, AI or GPT detector tools can often be wrong. Even at their best, the tools leave room for error. Research done by Cornell University suggests that AI detection tools tend to be biased against non-native English speakers. This is because most AI detector tools use text perplexity to identify whether the writing is human or AI-generated.

Text Perplexity: Explained

As per the research findings by Stanford University scholars, most AI detector tools use something called “text perplexity” to identify if a write-up is written by generative AI or not. Text perplexity –

- Looks at the sequence of words in a write-up.

- Measures how difficult each next word is for a generative AI model to predict.

- Treats higher predictability (low perplexity) as a sign of AI-generated text.

- Treats lower predictability (high perplexity) as a sign of human-written text.

This is why the GPT detector tools tend to flag the write-ups of non-native English writers. The reasons are –

- Non-native speakers tend to know fewer words.

- They use few complex words and phrases and write short sentences.

- They have little knowledge of sentence variations.
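The perplexity idea described above can be sketched in a few lines of Python. Real detectors rely on the word probabilities of a large language model. As a stand-in, this example uses a tiny word-pair (bigram) counter trained on one reference sentence, so the model, the smoothing, and the helper names `train_bigram` and `perplexity` are all assumptions made for illustration.

```python
import math
from collections import Counter


def train_bigram(corpus: str):
    """Count word pairs in a reference text (a toy stand-in for an LLM)."""
    tokens = corpus.lower().split()
    contexts = Counter(tokens[:-1])             # how often each word is followed by something
    bigrams = Counter(zip(tokens, tokens[1:]))  # how often each word pair occurs
    vocab_size = len(set(tokens))
    return contexts, bigrams, vocab_size


def perplexity(text: str, model) -> float:
    """Return exp of the average negative log-probability of each next word."""
    contexts, bigrams, vocab_size = model
    tokens = text.lower().split()
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two words to score")
    log_prob = 0.0
    for prev, word in pairs:
        # Add-one smoothing; the extra +1 leaves room for one unseen word.
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size + 1)
        log_prob += math.log(p)
    return math.exp(-log_prob / len(pairs))


reference = ("the interest rate on a home loan is fixed "
             "the interest rate on a car loan is variable")
model = train_bigram(reference)

predictable = "the interest rate on a home loan is variable"
surprising = "variable home a on loan rate is interest the"

print(perplexity(predictable, model))  # lower score: easy to predict
print(perplexity(surprising, model))   # higher score: hard to predict
```

The sentence that follows familiar word order gets the lower perplexity score, which a perplexity-based detector would read as "more likely AI-generated". Plain, formulaic writing scores the same way, no matter who wrote it.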

Putting the Theory into Practice

Now, let us take the following scenario, where an experienced writer is writing an article on a technical topic such as ‘Home Loan Interest’. Some common characteristics of such an article are –

- Little potential for creativity.

- Short sentences with straightforward syntax.

- Predictable sequence of words.

- Technical jargon with multiple pointers.

Such an article is easily flagged as AI-generated since the sequence of words is highly predictable by the GPT models.

This also explains why the LLM-based AI detection tool failed to recognise AI-generated content. As we removed certain lines and sections from the ChatGPT-generated blog, the text perplexity became higher. The detector's predictions about the next words no longer matched the text, and hence it identified the blog as human-generated.

Hire the best content writers and editors who can deliver top-notch quality blogs for your brand. Das Writing Services can deliver you customised content for your industry. Just drop your requirements at business@daswritingservices.com, and our team will connect with you.

How Does an LLM Work?

Large Language Models, or LLMs, power tools like ChatGPT. They create natural-sounding text by predicting which words should come next in a sentence. You can think of them as very smart autocomplete systems that take context into account.

LLMs learn by reading billions of sentences from books, websites, and articles. They pick up patterns in language, such as which words often appear together or how questions usually end.

They are not built to store exact sentences. Instead, they learn how language flows.

When you ask a question, the model builds a response one word at a time. At each step, it chooses a likely next word based on what it has learned.

The training text comes largely from public sources like Wikipedia, news websites, and online forums.

There is no magic involved. It is simply large-scale pattern recognition that allows AI to write in a very human way.
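The word-by-word prediction described in this section can be illustrated with a toy "autocomplete" in Python. This is only a sketch under heavy simplifying assumptions: it counts which word most often follows another in a single sentence, whereas real LLMs use neural networks trained on vast amounts of text and usually pick among several likely words instead of always taking the top one. The names `train_next_word` and `generate` are hypothetical helpers.

```python
from collections import Counter, defaultdict


def train_next_word(corpus: str) -> dict:
    """Record, for every word, how often each other word follows it."""
    tokens = corpus.lower().split()
    followers = defaultdict(Counter)
    for prev, word in zip(tokens, tokens[1:]):
        followers[prev][word] += 1
    return followers


def generate(followers: dict, start: str, max_words: int = 6) -> str:
    """Build a phrase one word at a time, always taking the likeliest next word."""
    words = [start]
    for _ in range(max_words - 1):
        options = followers.get(words[-1])
        if not options:
            break  # nothing ever followed this word in the training text
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_next_word(corpus)
print(generate(model, "the"))  # prints "the cat sat on the cat"
```

Even this tiny model produces a phrase that looks grammatical without storing any full sentence, simply by following the most common word pairs. Scaling the same idea up enormously is what lets LLMs sound so human.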

Want to know how AI-driven search works in SEO? Read our detailed blog to learn more.

How is an LLM Trained?

AI language models are trained on millions of inputs already available from public sources such as –

- News

- Articles

- Reports

- Blogs

- Published books

- Research reports

These source materials were created by humans. So, besides learning the information, LLM-based AI models also learn the way humans use language, and they replicate that pattern to produce comprehensible texts and materials.

The Results

Hence the extreme similarity between AI and human-generated content. The matter of concern is that AI language models are built on deep learning neural networks. These machine learning models are loosely modelled on the way the human brain learns new information, and they keep improving as they are retrained and updated. So, the little mistakes, or lapses in writing, are only going to get further rectified with future updates of the generative language models.

Afterword

With the findings of our little experiment and existing research literature, it is safe to conclude that AI content detector tools are flawed and require human intervention if they are to be trusted.

Moreover, the tools sometimes do not even have access to the advanced features and resources that go into producing ChatGPT’s answers. So, chances remain high that most tools either fail to identify AI-generated content or, worse, penalise humans by flagging the fruits of their labour as AI-generated text.

Content agencies providing AI-written content can offer services at a very low cost. However, that content is harmful for your website as Google likes content that adds value to the reader. Contact Das Writing Services now and get well-researched and valuable content!

Subhodip Das is the founder and CEO of Das Writing Services Pvt. Ltd. He has 12 years of experience in the field of Digital Marketing and specialises in Content Writing and Marketing Strategies. He has worked with well-established organisations and startups, helping them achieve increased Search Engine Rank visibility. If you want to grow your business online, you can reach out to him here.
